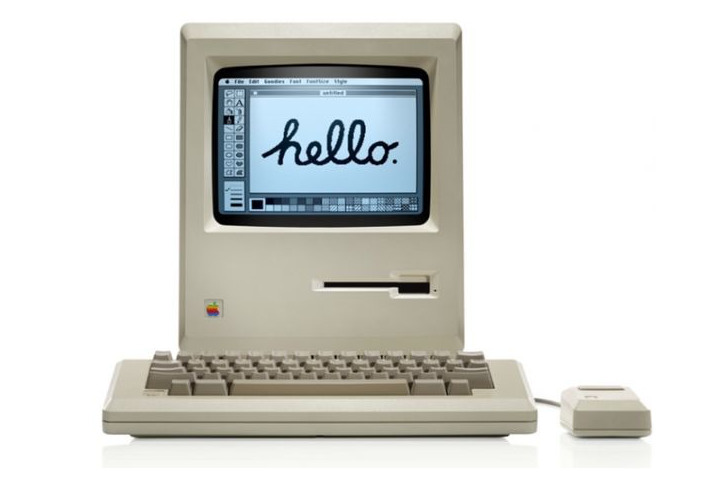

Sal's Signature

The first Macintosh said hello, so Sal must too. Whenever the user opens the app, Sal's signature animates across the screen. The mechanics end up being pretty similar to the FFT wave. Two types of Bézier curve are involved: quadratic and quintic. The wave uses a quintic curve because it makes zero slope at the peaks and valleys a little easier to achieve. The signature uses a quadratic curve because it more easily captures the smooth strokes of handwriting. Rendering a curve requires a series of points that define its path.

To animate a signature, I (surprise, surprise) needed a signature. I took inspiration from famous signatures, of course, making the 'S' massive and the 'a' small. It had to be drawn in one continuous line, which makes the animation prettier. It also needed to start at mid-height on the left and finish at mid-height on the right. After an hour in Desmos, each of the 32 points (below) was loaded into a ...
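The text contrasts two Bézier curves, and a small sketch helps show why each fits its job. This is a minimal Python illustration under stated assumptions, not Sal's actual code. `quintic_ease` assumes the wave's quintic runs from a valley to a peak with control values [0, 0, 0, 1, 1, 1]; repeating the end values expands the curve to 6t^5 - 15t^4 + 10t^3, whose slope is zero at both ends. `signature_path` assumes the guide points feed a chain of quadratic curves using a common midpoint scheme: each interior point acts as a control point and neighbouring segments join at the midpoint between guide points, which keeps the stroke continuous with no corners. With this scheme the line passes through the first and last points and those midpoints, not through every guide point. All function names and the sample guide points are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def quintic_ease(t: float) -> float:
    """1-D quintic Bezier with (assumed) control values [0, 0, 0, 1, 1, 1].

    Repeating the first and last control values flattens both ends, so the
    expansion 6t^5 - 15t^4 + 10t^3 has zero slope at t = 0 and t = 1.
    """
    return t * t * t * (t * (6.0 * t - 15.0) + 10.0)


def quadratic_bezier(p0: Point, c: Point, p1: Point, t: float) -> Point:
    """Evaluate B(t) = (1-t)^2 p0 + 2(1-t)t c + t^2 p1 for t in [0, 1]."""
    u = 1.0 - t
    return (
        u * u * p0[0] + 2.0 * u * t * c[0] + t * t * p1[0],
        u * u * p0[1] + 2.0 * u * t * c[1] + t * t * p1[1],
    )


def midpoint(a: Point, b: Point) -> Point:
    return ((a[0] + b[0]) / 2.0, (a[1] + b[1]) / 2.0)


def signature_path(points: List[Point], samples: int = 16) -> List[Point]:
    """Hypothetical helper: turn guide points into one continuous polyline.

    Interior points act as control points; consecutive quadratic segments
    meet at the midpoint between neighbouring guide points, where both
    segments share the same tangent, so the stroke has no corners.
    """
    if len(points) < 3:
        return list(points)
    # Joints: first point, midpoints between interior neighbours, last point.
    joints = [points[0]]
    joints += [midpoint(points[i], points[i + 1]) for i in range(1, len(points) - 2)]
    joints.append(points[-1])

    path: List[Point] = []
    for i in range(len(joints) - 1):
        control = points[i + 1]
        # Skip t = 1 here; the next segment starts at that same joint.
        for k in range(samples):
            path.append(quadratic_bezier(joints[i], control, joints[i + 1], k / samples))
    path.append(points[-1])  # finish the last segment at t = 1
    return path


# Hypothetical guide points that start and end at mid-height (y = 0.5).
guide = [(0.0, 0.5), (0.2, 1.0), (0.4, 0.0), (0.6, 0.8), (1.0, 0.5)]
stroke = signature_path(guide)
print(len(stroke), stroke[0], stroke[-1])  # 49 (0.0, 0.5) (1.0, 0.5)
```

Animating the signature then amounts to drawing `stroke` one sample at a time. In a real app the 32 Desmos points would presumably replace the toy `guide` list, and the sample count per segment trades smoothness against the number of vertices drawn.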